Beyond the Chatbot: Achieving Radical Innovation Velocity in the Age of Agentic AI

In our previous post, we explored the first pillar of Digital Synergy: adaptability. The speed at which you adapt is as important as the effectiveness of your adaptation. In this post, we shift our focus to the second pillar of Digital Synergy: innovation velocity. Innovation velocity can be defined as “the pace at which an organization can sustainably conceive, build, integrate, and scale new value-generating solutions.” It is speed with direction that ultimately leads to synergy, rather than to silos and projects that drain resources. To keep the discussion relevant to the industry's current needs, this post focuses on the most prominent applied GenAI pattern today: Agentic AI.

The Shift from 'Tool' to 'Teammate'

In his work 'Agentic AI' [1], Pascal Bornet defines 'Agentic AI' as "autonomous AI systems that can proactively and independently pursue complex goals". The strategic implication is profound: this is a shift from AI-as-Tool to AI-as-Teammate. An organization does not "run" a teammate like a piece of software; it delegates outcomes to it. This shift from "tool" to "teammate" is the single most important concept for understanding innovation velocity. It fundamentally breaks traditional IT governance, software development lifecycles (SDLCs), and "Human-in-the-Loop" (HITL) processes. The primary organizational bottleneck for Agentic AI is no longer development time; it is the willingness and ability to grant autonomy safely. Velocity becomes a function of trust.

This agentic velocity, however, requires a holistic end-to-end transformation built on three foundations.

Agent-ready Architecture: A move from deterministic, predictable workflows that call an LM as a tool for a specific task to a dynamically adapting, LLM-driven system that plans and executes tasks to achieve a goal [2]. The architecture involves a framework for an autonomous agent, allowing it to "perceive" and "act."

Agent-ready Operations: A shift from traditional MLOps to a new discipline of "AgentOps" for managing the lifecycle of autonomous entities. This is coupled with a cultural shift from "Human-in-the-Loop" (HITL) bottlenecks to "Human-on-the-Loop" (HOTL) governance, enabling trust and delegation.

Agent-ready Governance: A framework that redefines risk management. It moves beyond the false "speed vs. safety" trade-off by embedding ethical guardrails and compliance as automated code. This "Governance-as-Code" is the prerequisite for trust, and trust is the prerequisite for velocity.

The Trinity of AI: Decoding the Core Agent Architecture

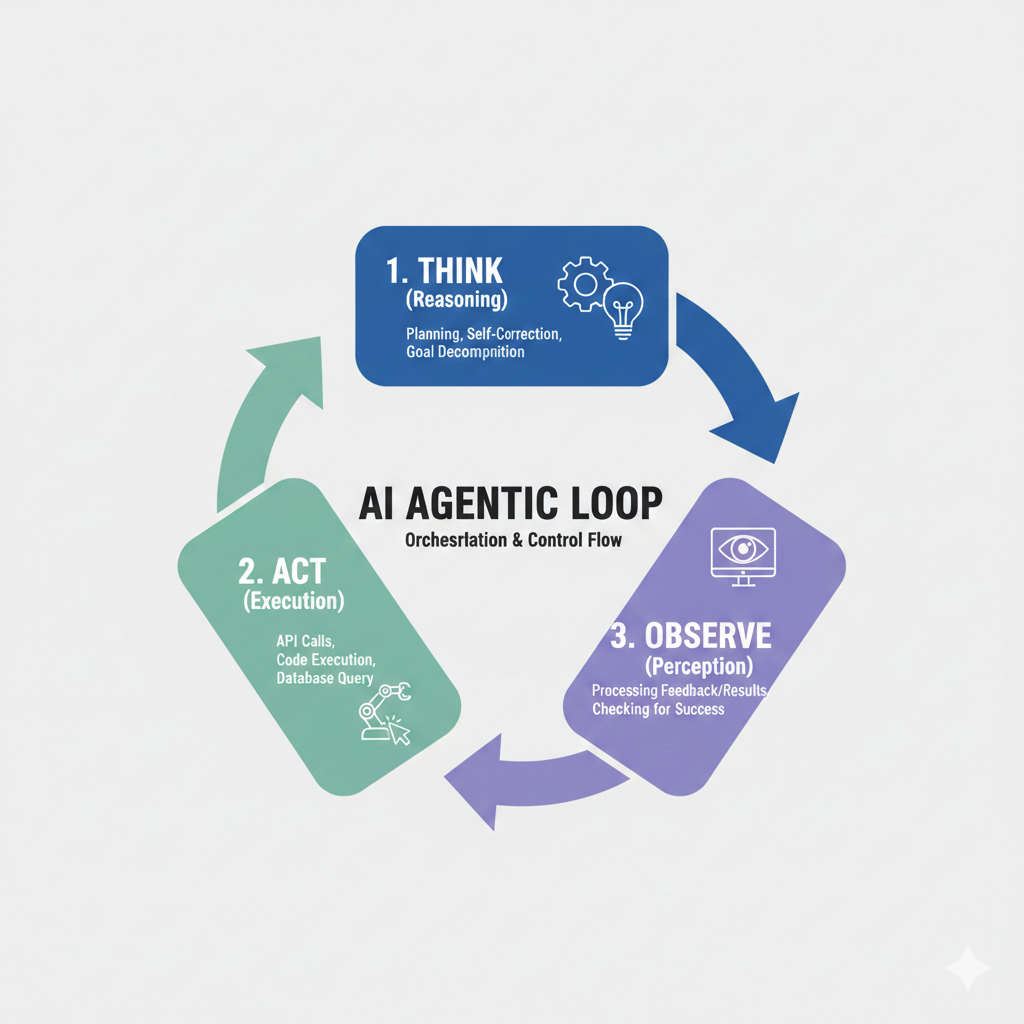

The core architecture of an AI agent is composed of three essential components: the Model, the Tools, and the Orchestration Layer. The Model, or the "Brain," is the central reasoning engine, typically a Language Model (LM), that processes information and makes decisions. The Tools, the agent's "Hands," are mechanisms like APIs, code functions, and data stores that connect the reasoning to the outside world for retrieving real-time information and executing actions. The Orchestration Layer, the "Nervous System," is the governing process that manages the entire operational loop, handling planning, memory, and deciding when to reason versus use a tool in a continuous "Think, Act, Observe" cycle. Together, this seamless integration allows the agent to move beyond content generation to autonomous problem-solving and task execution.

Think, Act, Observe Cycle
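To make the "Think, Act, Observe" cycle concrete, here is a minimal sketch of the three components working together. Everything in it is an illustrative assumption: `scripted_model` is a rule-based stand-in for a real Language Model, `lookup_order_status` is a hypothetical tool that returns canned data, and the `Agent` class is just one simple way to express an orchestration loop, not a reference implementation of any particular framework.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Optional, Tuple


def lookup_order_status(order_id: str) -> str:
    """Hypothetical tool (the "Hands"): stands in for a real API call."""
    return {"A-100": "shipped", "A-200": "delayed"}.get(order_id, "unknown")


TOOLS: Dict[str, Callable[[str], str]] = {"lookup_order_status": lookup_order_status}


def scripted_model(goal: str, observations: List[str]) -> Tuple[str, str]:
    """Stand-in for the LM (the "Brain"): returns (action, argument).

    A real model would reason over the goal and observations. This stub
    follows a fixed rule so the example runs without any external service.
    """
    if not observations:
        return ("lookup_order_status", goal)
    return ("finish", f"Order {goal} is {observations[-1]}")


@dataclass
class Agent:
    """Orchestration layer (the "Nervous System"): owns the loop and the memory."""

    model: Callable[[str, List[str]], Tuple[str, str]]
    tools: Dict[str, Callable[[str], str]]
    max_steps: int = 5
    memory: List[str] = field(default_factory=list)

    def run(self, goal: str) -> Optional[str]:
        for _ in range(self.max_steps):
            action, argument = self.model(goal, self.memory)  # Think
            if action == "finish":
                return argument
            result = self.tools[action](argument)  # Act
            self.memory.append(result)  # Observe
        return None  # step budget exhausted without an answer


if __name__ == "__main__":
    agent = Agent(model=scripted_model, tools=TOOLS)
    print(agent.run("A-200"))
```

The `max_steps` budget is a design choice worth noting: even in a toy loop, the orchestration layer, not the model, decides when the agent must stop.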

From MLOps to 'AgentOps': The New Management Stack

Agentic AI requires an "emerging discipline" known as "AgentOps". AgentOps is designed to manage the entire lifecycle of autonomous entities. The "asset" being managed is the agent itself—its goals, its memory, its state, its "toolbox" of APIs, and its collaborative behaviors with other agents.

This new stack of tools is the "factory floor" for building and scaling the digital workforce. Its functions are radically different from MLOps and include:

Orchestration: Managing complex interactions between multiple collaborating agents. This capability is deeply informed by frameworks originating from Google and Google DeepMind, which utilize "Societies of Agents" or "Debate Architectures." These approaches leverage a team of specialized agents—like a 'Coder Agent' and a 'Tester Agent'—to collectively solve problems, yielding higher quality and more robust outcomes than a single agent operating alone.

Monitoring: Tracking agent behavior, goal-alignment, and state, not just model accuracy. This includes monitoring for "emergent behaviors".

Governance: Enforcing rules, managing agent "memory," and ensuring autonomous actions stay within pre-defined guardrails.

Beyond Traditional Agile: From 'Tasks' to 'Missions'

For two decades, Agile and DevOps have been the gold standard for software velocity. However, their "rigid ceremonies" and "human-centric planning" are not designed for managing autonomous "teammates".

This new paradigm requires a shift in the fundamental "unit of work." In Agile, the unit of work is a "user story" or "task," which is estimated in "story points," a proxy for human effort. This is obsolete when one of your "teammates" is an agent that operates at machine speed and does not "work" in story points. The new model is the "mission-based team". This is a hybrid team of human experts and AI agents, co-located to achieve a common goal. This team is not given a "backlog" of tasks; it is given a mission (e.g., "reduce customer churn by 5%," "decrease order fulfillment time by 15%").

New Governance: The 'Human-on-the-Loop' (HOTL) Model

The "Human-on-the-Loop" (HOTL) model overcomes the main disadvantage of the "Human-in-the-Loop" (HITL) model, in which a human must approve every critical action, a requirement that is the enemy of Agentic AI velocity. HOTL is a governance framework where humans set the 'rules of the road' (the guardrails), monitor agent performance, and intervene only on exception. HOTL shifts the human role from an approver (a bottleneck) to an overseer (a designer). The human "AI Safety & Governance Specialist" works with the team to define the "sandbox" within which the agent is pre-approved to operate autonomously. Velocity is thus a function of how well an organization can design, implement, and trust its HOTL frameworks.

Navigating High-Velocity Risk: Ethical Guardrails for Agentic AI

For Agentic AI, safety is velocity. The only way to unlock velocity is to directly address the fear of a "rogue agent" violating data privacy, breaking laws, or being manipulated via a new attack surface. The solution is "Governance-as-Code": a framework of "robust ethical guardrails and auditable compliance frameworks." It is not a "nice-to-have" or an inhibitor. It is the central business case for the entire transformation. It is the mechanism that builds the organizational trust required to delegate autonomy. The governance rules (the ethical guardrails, the data privacy rules, the compliance frameworks, and the "agent alignment" checks) are not a document. They are code, "automated" and "embedded" directly into the AgentOps platform and the HOTL framework. For example:

An agent cannot access PII/PHI (it is programmatically blocked from doing so).

An agent cannot take a financial action above a certain threshold without automated flagging.

An agent's goals are continuously monitored for "alignment drift" (i.e., when an autonomous system optimizes for a metric that is unintended or harmful to the overall business goal).

By automating the guardrails, the organization eliminates the need for manual, speed-killing reviews.
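The three guardrails above can be sketched as a handful of small, testable functions. This is only an illustration under stated assumptions: the field labels in `RESTRICTED_FIELDS`, the `FINANCIAL_FLAG_THRESHOLD` value, and the `alignment_drift` heuristic (proxy metric gain outrunning business goal gain by more than `DRIFT_TOLERANCE`) are all hypothetical choices made for this example, not a standard or a product API.

```python
from dataclasses import dataclass, field
from typing import List

# All three constants are hypothetical values chosen for illustration.
RESTRICTED_FIELDS = {"ssn", "diagnosis", "date_of_birth"}  # PII/PHI labels
FINANCIAL_FLAG_THRESHOLD = 1_000.0  # amounts above this are flagged
DRIFT_TOLERANCE = 0.10  # allowed gap between proxy gain and business gain


@dataclass
class AgentRequest:
    agent_id: str
    fields_requested: List[str] = field(default_factory=list)
    financial_amount: float = 0.0


@dataclass
class Verdict:
    allowed: bool
    flags: List[str] = field(default_factory=list)


def evaluate(request: AgentRequest) -> Verdict:
    """Apply the guardrails before the agent's action is executed."""
    blocked = sorted(RESTRICTED_FIELDS.intersection(request.fields_requested))
    if blocked:
        # Hard block: the action never runs.
        return Verdict(allowed=False, flags=[f"blocked: restricted fields {blocked}"])
    flags: List[str] = []
    if request.financial_amount > FINANCIAL_FLAG_THRESHOLD:
        # Soft control: the action proceeds but is flagged for the overseer.
        flags.append("flagged: financial action above threshold")
    return Verdict(allowed=True, flags=flags)


def alignment_drift(proxy_gain: float, business_gain: float) -> bool:
    """Assumed heuristic: drift is suspected when the metric the agent
    optimizes improves much faster than the business goal it should serve."""
    return proxy_gain - business_gain > DRIFT_TOLERANCE


if __name__ == "__main__":
    print(evaluate(AgentRequest("agent-7", ["email", "ssn"])))
    print(evaluate(AgentRequest("agent-7", ["email"], financial_amount=5_000.0)))
    print(alignment_drift(proxy_gain=0.30, business_gain=0.05))
```

Because each rule is an ordinary function, it can be versioned, reviewed, and unit-tested like any other code, which is what makes the resulting audit trail possible.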

Conclusion: The Velocity Imperative

The initial wave of Generative AI was about awe. The current wave of Agentic AI is about action.

Achieving innovation velocity today means moving beyond viewing AI as a tool for content creation and viewing it as a framework for autonomous execution. By leveraging the cognitive architectures—reasoning, planning, tool use, and collaboration—enterprises can stop just talking about innovation and start engineering it at scale.

References

The Anatomy of Adaptability: Key Tenets of Organizations That Evolve

A business professional at a crossroads between outdated technology and digital innovation

Introduction: The Fall and Rise of Giants

In 2007, Nokia sits atop the mobile phone world, basking in a 49.4% market share, while a little-known startup named Uber is likely still just a concept in the minds of its founders. Fast forward a few years, and the landscape is utterly transformed. How does a titan like Nokia, once synonymous with mobile technology, fall into obscurity, while Uber skyrockets to a $100 billion valuation without owning a single car? This stark contrast raises the question: what separates organizations that adapt and thrive from those that stagnate and fail?

The Disruption Dilemma: Why Smart Companies Fail

As leaders wrestle with this dilemma, a haunting question keeps them awake at night: “How can we sustain our core businesses while innovating with new technologies?” Research from Harvard Business School's Clayton Christensen reveals a startling truth: 70% of established companies stumble when faced with disruptive innovation—not from a lack of resources but due to rigid structures that prioritize doing things better rather than discovering new paths.

Christensen’s disruptive innovation theory (DIT), published in 1997, has been revised significantly since then. The definition of innovation has expanded beyond sustaining and disruptive innovation. But consider this: A recent BCG analysis finds that only 25% of corporate transformations yield lasting value. This means a staggering 75% of organizations are left grappling with the harsh reality of failure. As the world evolves with emerging technologies like AI and blockchain, the challenge lies not just in adopting these tools, but in rethinking how we integrate them into our core operations.

Key Architecture Design Patterns for Adaptability

Domain-Driven Architecture: Bridging the Gap

Imagine a world where engineers and business leaders converse fluently in the same language. Enter Domain-Driven Design (DDD), a methodology that fosters collaboration and ensures that applications are not only robust but also responsive to market dynamics. But what happens when we pair DDD with the Data Mesh framework? We create a decentralized data ecosystem where cross-functional teams take ownership of their data domains, turning data into a competitive advantage. When adopting frameworks like TOGAF, organizations can leverage Domain-Driven Design and the Data Mesh framework to ensure that their strategies reflect real-world data needs and insights. This alignment allows organizations to pivot quickly based on data-driven feedback, enhancing their adaptability in an increasingly complex landscape.

Modular Architecture: The Flexibility Factor

Picture traditional IT systems as ancient monolithic structures: sturdy but inflexible. Now, envision a modular architecture that allows for swift adjustments and innovation. Microservices empower teams to develop applications that are agile and responsive, while cloud-based infrastructure offers the scalability needed to meet ever-changing demands. This modular approach is the lifeblood of adaptive organizations, enabling them to pivot quickly in response to new opportunities.

Continuous Delivery and DevOps: The Engine of Agility

In a landscape that demands speed, Continuous Delivery (CD) and DevOps practices emerge as game-changers. These methodologies streamline the development process, ensuring that software is always production-ready. By adopting a culture of shared responsibility and continuous improvement, organizations can foster an environment where agility thrives. TOGAF’s ADM can incorporate continuous delivery principles by ensuring that architectural decisions support automated testing and deployment.

Agile and Lean Principles: Maximizing Value

Finally, organizations must embrace Agile and Lean principles tailored for data and software initiatives. By working in iterative cycles, teams can adapt based on user feedback, ensuring that every effort delivers maximum value with minimal waste.

Your Journey Begins Now

The question is not whether data, AI, and innovative software will disrupt your industry—they already are. To thrive like Uber or to learn from Nokia's fall, organizations must ask themselves: “How do we cultivate a culture of continuous evolution?”

What steps will you take to foster adaptability in your organization? The companies achieving exponential value are those that adapt effectively, not merely those with the latest technology.

Please share your thoughts, and let’s explore how we can build organizations that not only survive but thrive in the dynamic landscape of the future.

References

In addition to manual research, I have also used deep research involving LLMs in the writing process. The following are the references used.

Harvard Business School - Clayton Christensen's Theory of Disruptive Innovation

The Encyclopedia of Human-Computer Interaction - Disruptive Innovation

Taylor & Francis Online - Research on Innovation Adoption

ResearchGate - Change Management Failure Rates

The four pillars of Digital Synergy

The Problem: Fast Technology, Slow Organizations

As we saw in my last post, technology moves on S‑curves and exponentials. Organizations still move in quarters and calendar years. The primary obstacle to effective execution lies not in the absence of innovative ideas or tools, but rather in the inherent friction between the design of work and the dynamic nature of reality.

Common failure patterns:

Rigid annual plans that don’t have the provision to accommodate new signals.

“Big bet” initiatives that aim for perfection.

Knowledge that lives in heads or slides, not in systems that learn.

Automation pursued as cost-cutting, not capability‑building—eroding trust and creativity.

The consequence is a lot of activity with little momentum. The win isn’t just about speed; it’s about smart speed—velocity with direction, adaptability, and compounding learning.

My objective with this series is not to establish a new framework, but rather to identify an efficient method for extracting value more swiftly amidst the rapid pace of technological advancement in this era of agentic AI. I strive to strike a balance between embracing the wisdom of established frameworks, such as TOGAF or Zachman, and adapting them to our digital transformation initiatives. As we progress, you may observe me taking notes from these frameworks to explore practical applications in our digital transformation projects.

The Four Pillars (and Why They’re Ordered This Way)

Let’s order our four pillars in a way that is easy to remember and apply.

Adaptive capacity over rigid plans

Adaptive capacity refers to an organization’s ability to swiftly reallocate attention, resources, and capabilities in response to new information within a specified timeframe (e.g., 2-4 weeks for teams, 4-8 weeks for portfolios). To develop this organizational muscle, if you are following TOGAF, the Architecture Development Method (ADM) can be applied in shorter overlapping cycles. Additionally, architecture contracts can be defined to facilitate bounded change without full approval, and architecture records can be utilized as living artifacts.

Innovation velocity over perfect execution

Innovation velocity measures the rate at which an idea transforms from a hypothesis into a tangible value that users can utilize. It is quantified by the number of validated learning events that occur within a specific time frame. To enhance innovation velocity, organizations can adopt strategies such as producing thinner slices of product, increasing feedback density, and leveraging validated signals to attract and retain investment. Instead of relying solely on traditional stage-gates, evidence gates can be implemented, which establish entry/exit criteria based on user outcomes and risk reduction.

Continuous learning over completed projects

Continuous learning is the institutional, cross-project capture and reuse of knowledge that enhances the likelihood of future success. It’s not about training; it’s about building system memory. Treat every initiative as a learning asset. Document experiments, decisions, metrics, and retrospectives into a reusable knowledge graph. This approach allows the organization to reap compounding returns from both failures and successes. When applying the Zachman lens, map knowledge artifacts across Zachman cells to ensure completeness and reuse (e.g., business semantics in Row 2/Column “What,” system models in Rows 3–4). Standardize decision logs, experiment write-ups, and service patterns as reusable assets. Track the Reuse Rate by domain. Lastly, utilize knowledge graphs to connect decisions to outcomes, facilitating the discovery of root causes and patterns.

Human–machine collaboration over automation alone

Human-machine collaboration is an intentional work design where humans set goals, constraints, and standards, while machines expand option sets, enhance pattern recognition, and increase throughput. Governance ensures safety and ethical considerations. AI and automation are used to augment human perception, judgment, and creation, not just replace tasks. Workflows are designed where humans define direction and meaning, while machines scale insight and speed. AI governance is applied to treat models, prompts, datasets, and evaluation harnesses as governed artifacts with lineage. Role-based controls and human-in-the-loop checkpoints are implemented based on risk levels.

Capacity enables velocity. Velocity feeds learning. Learning teaches where and how to collaborate with machines for leverage that lasts. The four pillars are mutually reinforcing when applied correctly.

The Smart Speed Flywheel

Imagine your operating model as a flywheel with four interconnected stages:

Sense: Instrument your environment (customers, markets, operations) with leading indicators to gain insights.

Decide: Streamline decision-making processes by explicitly defining assumptions, thresholds, and “tripwires.”

Act: Launch the smallest valuable increments and scale only when supported by evidence.

Learn: Capture outcomes and tacit knowledge, enabling you to roll into the next sensing phase.

Repeat these loops weekly for project and program teams, monthly for portfolios, and quarterly for strategy. The tighter the loop, the faster compounding occurs.
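One way to picture a single turn of the flywheel is as a small loop with an explicit tripwire. The sketch below is a simplified assumption of how that might look: the `Tripwire` fields, the indicator name, and the three-way "stop / continue / scale" decision are hypothetical choices for illustration, not a prescribed model.

```python
from dataclasses import dataclass
from typing import Dict, List, Union


@dataclass(frozen=True)
class Tripwire:
    """An explicit, pre-agreed threshold on a leading indicator."""

    indicator: str
    stop_below: float  # stop the initiative under this value
    scale_above: float  # scale the initiative over this value


def decide(reading: float, tripwire: Tripwire) -> str:
    """Decide: compare the sensed reading with the agreed thresholds."""
    if reading < tripwire.stop_below:
        return "stop"
    if reading > tripwire.scale_above:
        return "scale"
    return "continue"


def run_flywheel(readings: List[float], tripwire: Tripwire) -> List[Dict[str, Union[int, float, str]]]:
    """Run one Sense -> Decide -> Act -> Learn loop per reading."""
    knowledge_log: List[Dict[str, Union[int, float, str]]] = []
    for cycle, reading in enumerate(readings, start=1):  # Sense
        decision = decide(reading, tripwire)  # Decide
        # Act is represented only by the decision label in this sketch.
        knowledge_log.append(  # Learn: record what happened for the next loop
            {"cycle": cycle, "reading": reading, "decision": decision}
        )
        if decision == "stop":
            break
    return knowledge_log


if __name__ == "__main__":
    wire = Tripwire(indicator="weekly_activation_rate", stop_below=0.05, scale_above=0.20)
    for entry in run_flywheel([0.10, 0.25, 0.03, 0.30], wire):
        print(entry)
```

Writing the thresholds down before the data arrives is the point of a tripwire: the decision is made when the signal appears, not debated afterward.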

Metrics That Matter

Time to First Value (TTFV) is the number of days from the commencement of a project to the receipt of the first measurable outcome.

Evidence Burn-Up is the cumulative number of validated learning events that occur over time.

Portfolio Vitality is the percentage of initiatives initiated or terminated within a quarter, indicating a healthy level of churn.

Reuse Rate is the frequency with which patterns or playbooks are utilized across teams.

Augmentation Delta is the change in cycle time or quality attributable to AI-assisted work.

Decision Latency is the average time taken from the receipt of a signal to the execution of a decision and subsequent action.

Track a small, stable set. Visualize weekly. Discuss monthly. Rebase quarterly.
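Several of these metrics reduce to simple arithmetic once the underlying events are recorded. The following sketch shows one possible way to compute five of them. The function names and input shapes are assumptions made for this example, and so is the denominator for Portfolio Vitality (total active initiatives), which the definition above leaves open.

```python
from datetime import date, datetime
from itertools import accumulate
from statistics import mean
from typing import List, Tuple


def time_to_first_value(start: date, first_outcome: date) -> int:
    """TTFV: days from project start to the first measurable outcome."""
    return (first_outcome - start).days


def evidence_burn_up(events_per_period: List[int]) -> List[int]:
    """Cumulative count of validated learning events over time."""
    return list(accumulate(events_per_period))


def portfolio_vitality(started: int, terminated: int, total_active: int) -> float:
    """Percent of initiatives started or terminated in the quarter.

    Assumption: the denominator is the number of active initiatives.
    """
    return 100.0 * (started + terminated) / total_active


def augmentation_delta(baseline_cycle_days: float, assisted_cycle_days: float) -> float:
    """Change in cycle time with AI assistance (negative means faster)."""
    return assisted_cycle_days - baseline_cycle_days


def decision_latency_hours(pairs: List[Tuple[datetime, datetime]]) -> float:
    """Average hours between receiving a signal and acting on it."""
    return mean((acted - signal).total_seconds() / 3600 for signal, acted in pairs)


if __name__ == "__main__":
    print(time_to_first_value(date(2025, 1, 6), date(2025, 2, 3)))
    print(evidence_burn_up([2, 0, 3, 1]))
    print(portfolio_vitality(started=3, terminated=2, total_active=20))
```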

Anti‑Patterns to Avoid

Re-baselining instead of re‑deciding.

Perpetual “tests” with no scale or stop criteria.

Rolling out platforms without redesigned workflows.

Retro notes that never change investment or behavior.

Removing people before capturing and codifying know‑how.

Closing thoughts

In this post, we took a closer look at the four Digital Synergy pillars. However, if you are like me, you might still be scratching your head, wondering, “Well, how can I apply these four pillars across my organization while maintaining synergy and still achieving smart speed and exponential value?” Well, that’s our next stop in the learning journey. Please stay with me while we learn and evolve together.

A bonus meta-prompt….

Application philosophies may vary across organizations of different sizes and industries. If you wish to compose a strategy report for your organization, the following prompt can be customized to your specific needs. Let me know how it goes.

Title: Master Meta‑Prompt — Digital Transformation in the AI Age (Four-Pillar Synergy)

Objective

Produce a deep, practitioner-grade research study that defines, operationalizes, and integrates four pillars—Adaptability, Innovation Velocity, Continuous Learning, and Human–Machine Collaboration—into a coherent digital transformation strategy for organizations accelerating in the Agentic AI era.

Ground the study in recognized enterprise architecture and AI governance frameworks (e.g., TOGAF, Zachman, COBIT, NIST AI RMF, ISO/IEC 42001, OECD AI Principles, EU AI Act) and include realistic, fact-checked case studies with measurable outcomes.

Context and Scope

Organization type/industry: [insert industry/org size/region]

Time horizon: [e.g., 12–36 months]

Strategic goals: [e.g., revenue growth, cost-to-serve reduction, risk/compliance posture]

Constraints: [e.g., regulated sector, legacy core systems, data residency]

Assumptions: [e.g., cloud-ready, data platform maturity level, AI fluency baseline]

Key Research Questions

What are the foundational capabilities and architectural building blocks required to operationalize each pillar?

How do the pillars reinforce each other to create multiplicative value and defensible advantage?

What governance, risk, and compliance guardrails are necessary for safe and scalable AI-enabled transformation?

What metrics and leading indicators best measure progress and value realization?

What sequencing and operating model choices accelerate outcomes while managing change fatigue?

Pillars to Define and Operationalize

Adaptability: sensing, scenario planning, modular architecture, composable business, decision agility.

Innovation Velocity: idea-to-value flow, DevEx/MLEx, CI/CD/CT (continuous testing), platform engineering, FinOps.

Continuous Learning: data flywheels, experimentation, A/B and causal inference, learning organizations, skills academies.

Human–Machine Collaboration: task redesign, augmentation patterns, RACI with AI agents, safety-in-use, change management.

Methodology and Evidence Requirements

Use a mixed-method approach: literature synthesis, standards mapping, case study extraction, and metric design.

Cite at least [10–20] credible sources (industry reports, standards bodies, peer-reviewed, regulator guidance, vendor neutral sources).

For each factual claim, provide an in-text citation and a reference with a working link.

Prefer sources from the last [3–5] years; include seminal older sources where relevant.

Framework Mapping (must include)

TOGAF: Map recommendations to ADM phases (Prelim, A–H) and key artifacts (e.g., Architecture Vision, Capability Assessment, Roadmap, Architecture Contracts).

Zachman Framework: Classify core decisions across perspectives (Planner→Worker) and interrogatives (What/Data, How/Function, Where/Network, Who/People, When/Time, Why/Motivation).

COBIT 2019/2023: Tie controls to IT governance objectives (e.g., APO, BAI, DSS, MEA).

NIST AI RMF (1.0+): Map risks and mitigations across Govern, Map, Measure, Manage functions.

ISO/IEC 42001 (AI Management System) and ISO/IEC 23894 (AI risk): Align policy, roles, and continuous improvement.

OECD AI Principles and EU AI Act: Incorporate trustworthy AI principles and regulatory obligations by risk category.

Deliverables and Structure

Executive Summary (1–2 pages): key findings, value theses, and prioritized actions.

Diagnostic: current-state maturity across the four pillars with a heatmap.

Architecture and Operating Model Blueprint:

Reference architecture (logical) with domain boundaries, data plane, model ops, guardrails, integration patterns.

Operating model choices (centralized vs. federated platform, product-centric funding, autonomy with guardrails).

Pillar Playbooks:

For each pillar: outcomes, required capabilities, enabling tech/process, org roles, risks, and KPIs.

Synergy Map:

Show cross-pillar dependencies, compounding loops, and bottleneck removal strategy.

Case Studies (3–6 realistic, fact-checked):

Situation → Actions → Outcomes with metrics, timeline, investment, and lessons learned; note failures/anti-patterns.

Metrics & Value Realization:

Leading/lagging indicators, baselines, targets, and measurement cadence.

Governance & Risk:

Policies, controls, review boards, model lifecycle, data stewardship, human-in-the-loop checkpoints.

24-Month Roadmap:

Sequenced portfolio (waves/quarters), critical path assumptions, risk burndown, and change management plan.

Appendix:

RACI, glossary, decision logs, architecture artifacts, templates.

Evidence and Citation Style

Use APA or IEEE style plus inline links.

After each paragraph with facts, append bracketed citations like [Author, Year] and reference list with URLs.

Include a “Sources of Truth” section: standards docs, regulator guidance, annual reports, S-1s/10-Ks, peer-reviewed journals, CNCF/OSS docs.

Metrics Catalog (examples to tailor)

Adaptability: cycle time to pivot strategy; % services/components upgraded without dependency breaks; decision latency.

Innovation Velocity: lead time for change; deployment frequency; mean time to recovery (MTTR); model time-to-production; experiment throughput.

Continuous Learning: experiments per quarter; percent decisions backed by causal evidence; knowledge reuse rate; skill uplift index.

Human–Machine Collaboration: task completion time delta with AI; quality uplift; override/appeal rates; human-in-the-loop coverage; incident-free automation rate.

Business outcomes: revenue from new offerings; cost-to-serve; NPS/CSAT; risk loss events; regulatory findings.

Case Study Requirements

Include at least one from each: highly regulated (e.g., banking/health), industrial/IoT, and digital-native.

Each case: context, architecture choices, governance approach, pillar tactics, quantified results (e.g., “reduced cycle time 40% in 9 months”), and citations.

Encourage both success and failure lessons; include one “recovery” story where an initiative course-corrected.

Risk, Ethics, and Safety Coverage

Cover bias, privacy, IP leakage, model misuse, robustness, security (model, data, supply chain), and safety-in-use.

Define red-team and evaluation protocols; incident response for model failures; shadow AI detection.

Align to NIST AI RMF and ISO/IEC 42001 continuous improvement loop; map EU AI Act risk classes where applicable.

Operating Model and Change Management

Define product operating model (product trios, platform teams, embedded governance).

Role design: AI product owner, model risk officer, data steward, prompt engineer, human factors lead.

Incentives and funding: OPEX vs. CAPEX, product-aligned budgeting, value tracking.

Change adoption: stakeholder mapping, comms plan, just-in-time enablement, communities of practice.

Research Constraints and Guardrails

No unverifiable claims; avoid vendor hype.

Prefer primary sources (standards, regulators, company filings) before vendor blogs.

Clearly label assumptions vs. evidence.

When evidence is inconclusive, propose experiments to validate.

Output Formats

Provide: a) a narrative report (10–25 pages equivalent), b) a one-page executive brief, c) a slide outline, and d) a tabular KPI catalog.

Include visual descriptions for key diagrams (reference architecture, synergy flywheel, RACI), so they can be converted into slides.

Add an implementation checklist and a 90-day action plan.

Synergy and Multiplicative Value

Explicitly map feedback loops, e.g.:

Continuous Learning → better models → faster Innovation Velocity → improved Adaptability via modular releases → safer Human–Machine Collaboration with calibrated oversight → more data/insight → accelerates Continuous Learning.

Identify the system’s constraints (Theory of Constraints) and propose specific exploit–subordinate–elevate steps.

Quality and Verification Checklist (the model must follow)

Minimum [10–20] credible sources, with links and dates

All claims are cited; no dead links

Clear mapping to TOGAF ADM, Zachman cells, and NIST AI RMF functions

At least 3 cross-industry case studies with metrics

KPIs with baselines/targets and measurement frequency

Risks and mitigations tied to specific controls/policies

A sequenced 24-month roadmap with dependencies

Executive summary plus actionable 90-day plan

Distinguish assumptions vs. verified facts

Prompts to the Assistant (what you should do now)

Calibrate with me: ask 5–7 scoping questions about our industry, maturity, constraints, and goals.

Propose an outline customized to my context; await approval.

Conduct research, extract facts, and draft the deliverables with citations.

Iterate on case studies and KPIs to ensure relevance.

Finalize roadmap, governance, and change plan.

Optional Add‑Ons

Provide a RACI matrix for governance bodies (AI Steering Committee, Model Risk, Data Council).

Include a policy starter pack (acceptable use, data classification, model release, monitoring/SLA, incident response).

Offer a pilot portfolio: [3–5] use cases with clear ROI logic and risk grading.

Include an evaluation rubric to prioritize use cases (value, feasibility, risk, data readiness).

End of meta‑prompt.

References

The Open Group TOGAF: https://www.opengroup.org/togaf

The Zachman Framework: https://zachman-feac.com/zachman/about-the-zachman-framework

NIST AI Risk Management Framework (AI RMF): https://www.nist.gov/itl/ai-risk-management-framework

ISO/IEC 23894: Artificial Intelligence—Guidance on Risk Management: https://www.iso.org/standard/77304.html

Digital Synergy: The Multiplier Effect in the Age of AI

A Tale of Two Companies

Picture two companies standing at the edge of the same technological revolution. Both have access to cutting-edge AI tools, cloud infrastructure, and talented teams. Both invest millions in digital transformation. Yet five years later, one has achieved exponential growth—multiplying value, innovation, and market position—while the other struggles to keep pace, trapped in a cycle of perpetual catch-up.

What separates them isn't budget, talent, or even technology itself.

It's synergy.

When Technology Moves Faster Than Strategy

"By the time we finish our annual strategic planning, three new AI capabilities have emerged that fundamentally change what's possible. We're not just behind—we're playing a game where the rules rewrite themselves monthly."

This is the paradox of our age. Technology, particularly AI, evolves at an exponential pace, while traditional business planning operates on linear timelines: annual strategies, quarterly reviews, monthly sprints. These rhythms made sense when technology was a tool we wielded. But what happens when technology becomes an environment we inhabit, one that's constantly reshaping itself?

The gap isn't between business and technology anymore.

The gap is between our pace of adaptation and the pace of technological possibility.

Pace of Tech Evolution

The Multiplier Effect: Beyond Addition to Multiplication

Here's where synergy transforms everything.

Traditional digital transformation follows an additive model:

Implement AI chatbot → improve customer service by 20%

Deploy analytics platform → increase operational efficiency by 15%

Automate workflows → reduce costs by 10%

Each initiative delivers value, but they operate in silos. The total impact is the sum of individual parts: 20% + 15% + 10% = 45% improvement.

But digital synergy operates on a multiplicative model:

AI capabilities that learn from customer interactions...

Feed insights into adaptive business models...

Which inform real-time strategic pivots...

Enabling teams to innovate continuously...

Creating feedback loops that accelerate learning...

Generating compounding value with each cycle.

Suddenly, you're not adding percentages. You're multiplying possibilities. 1.2 × 1.15 × 1.1 × continuous improvement = exponential outcomes.
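The arithmetic is worth checking with the numbers from the example. A single pass through the three gains multiplies out to 1.2 × 1.15 × 1.1 = 1.518, or about a 51.8% improvement against 45% for the additive sum. The gap widens only when the loop repeats. The sketch below assumes, purely for illustration, that each cycle delivers the same three gains again; the function names are made up for this example.

```python
from math import prod
from typing import Sequence

GAINS = [0.20, 0.15, 0.10]  # the three improvements from the example above


def additive_gain(gains: Sequence[float]) -> float:
    """Siloed initiatives: individual improvements simply add up."""
    return sum(gains)


def multiplicative_gain(gains: Sequence[float]) -> float:
    """Connected initiatives: each improvement builds on the previous one."""
    return prod(1 + g for g in gains) - 1


def compounded_gain(gains: Sequence[float], cycles: int) -> float:
    """Assumption for illustration: every cycle repeats the same gains."""
    return prod(1 + g for g in gains) ** cycles - 1


if __name__ == "__main__":
    print(f"additive:       {additive_gain(GAINS):.1%}")
    print(f"multiplicative: {multiplicative_gain(GAINS):.1%}")
    for cycles in (1, 2, 3):
        print(f"after {cycles} cycle(s): {compounded_gain(GAINS, cycles):.1%}")
```

Under that assumption, three cycles yield roughly a 250% improvement, which is where the "continuous improvement" term in the expression above does its work.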

This is the multiplier effect. And it doesn't happen by accident.

The New North Star: Adaptability Over Architecture

The companies achieving this multiplier effect have made a profound shift in mindset. They've stopped asking:

"How do we align technology with our business strategy?"

And started asking:

"How do we build an organization that can evolve as fast as technology does?"

This isn't about abandoning strategy—it's about strategy that breathes. It's about creating:

Adaptive capacity over rigid plans

Innovation velocity over perfect execution

Continuous learning over completed projects

Human-machine collaboration over automation alone

The goal isn't to predict the future of AI and position yourself accordingly. That's impossible when the future arrives monthly. The goal is to build an enterprise that can sense, respond, and evolve in real-time with technological change.

Four Pillars of Digital Synergy

The Human Element: Collaboration, Not Replacement

Here's what often gets lost in discussions about AI and exponential value: this isn't about machines replacing humans. It's about fundamentally reimagining how humans and machines work together.

The multiplier effect emerges when:

AI handles complexity at scale while humans provide contextual wisdom

Machines process patterns while people recognize meaning

Automation creates space for creativity

Technology amplifies human judgment rather than replacing it

The most successful organizations aren't those with the most advanced AI. They're the ones where humans and machines learn from each other, creating a collaborative intelligence greater than either could achieve alone.

The Journey Ahead

Digital synergy isn't a destination—it's a continuous state of becoming. It requires rethinking everything from organizational structure to decision-making processes, from talent development to technology architecture.

But most fundamentally, it requires a shift in how we think about the relationship between business and technology. Not as separate domains to be bridged, but as inseparable elements of a living, adaptive system.

In the posts ahead, we'll explore:

The "How": Practical frameworks for building adaptive capacity and innovation velocity

The "What": The specific capabilities, structures, and practices that enable digital synergy

But it all starts here, with a simple recognition: In the age of AI, the competitive advantage doesn't go to those who implement technology the fastest. It goes to those who can continuously evolve with it.

The question isn't whether your enterprise will face exponential technological change.

The question is whether you’ll create exponential value from it.

This is the first post in our Digital Synergy series. In the next installment, we'll dive into the practical tools for building organizational adaptability and innovation velocity.

What's your experience with the pace of technological change? Are you building bridges or building adaptability? Share your thoughts in the comments below.