Beyond the Chatbot: Achieving Radical Innovation Velocity in the Age of Agentic AI

In our previous post, we explored the first pillar of Digital Synergy: adaptability. The speed at which you adapt is as important as the effectiveness of your adaptation. In this post, we shift our focus to the second pillar of Digital Synergy: innovation velocity. Innovation velocity can be defined as “the pace at which an organization can sustainably conceive, build, integrate, and scale new value-generating solutions.” It is speed with direction, which ultimately leads to synergy rather than to silos and projects that drain resources. To keep the discussion relevant to the industry's current needs, this post focuses specifically on the most prominent applied GenAI pattern today: Agentic AI.

The Shift from 'Tool' to 'Teammate'

In his work 'Agentic AI'[1], Pascal Bornet defines 'Agentic AI' as "autonomous AI systems that can proactively and independently pursue complex goals". The strategic implication is profound: this is a shift from AI-as-Tool to AI-as-Teammate. An organization does not "run" a teammate like a piece of software; it delegates outcomes to it. This shift from "tool" to "teammate" is the single most important concept for understanding innovation velocity. It fundamentally breaks traditional IT governance, software development lifecycles (SDLCs), and "Human-in-the-Loop" (HITL) processes. The primary organizational bottleneck for Agentic AI is no longer development time; it is the willingness and ability to grant autonomy safely. Velocity becomes a function of trust.

Achieving this agentic velocity, however, requires an end-to-end transformation built on three foundations.

Agent-ready Architecture: A move from deterministic, predictable workflows that call an LM as a tool for a specific task to a dynamically adapting, LLM-driven system that plans and executes tasks to achieve a goal [2]. The architecture provides a framework for an autonomous agent, allowing it to "perceive" and "act."

Agent-ready Operations: A shift from traditional MLOps to a new discipline of "AgentOps" for managing the lifecycle of autonomous entities. This is coupled with a cultural shift from "Human-in-the-Loop" (HITL) bottlenecks to "Human-on-the-Loop" (HOTL) governance, enabling trust and delegation.

Agent-ready Governance: A framework that redefines risk management. It moves beyond the false "speed vs. safety" trade-off by embedding ethical guardrails and compliance as automated code. This "Governance-as-Code" is the prerequisite for trust, and trust is the prerequisite for velocity.

The Trinity of AI: Decoding the Core Agent Architecture

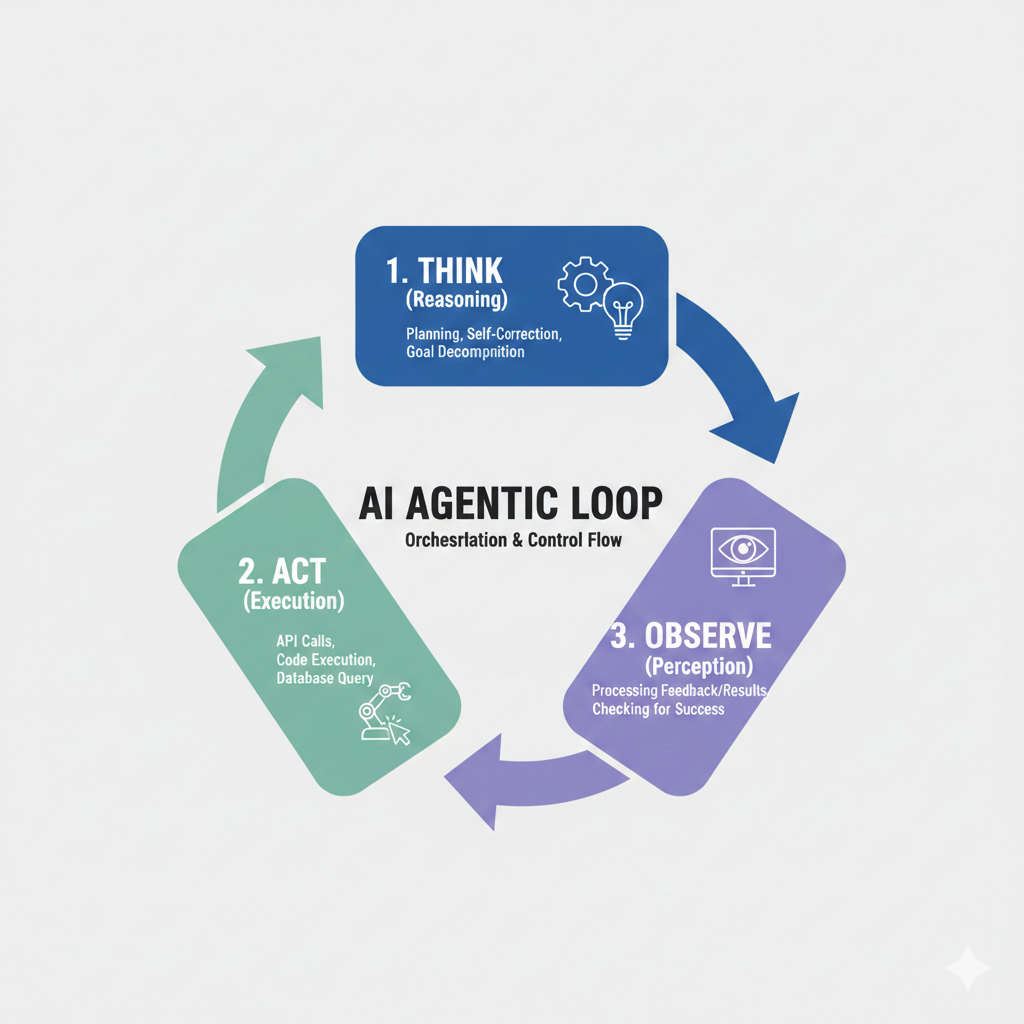

The core architecture of an AI agent is composed of three essential components: the Model, the Tools, and the Orchestration Layer. The Model, or the "Brain," is the central reasoning engine, typically a Language Model (LM), that processes information and makes decisions. The Tools, the agent's "Hands," are mechanisms like APIs, code functions, and data stores that connect the reasoning to the outside world for retrieving real-time information and executing actions. The Orchestration Layer, the "Nervous System," is the governing process that manages the entire operational loop, handling planning, memory, and deciding when to reason versus use a tool in a continuous "Think, Act, Observe" cycle. Together, this seamless integration allows the agent to move beyond content generation to autonomous problem-solving and task execution.

Think, Act, Observe Cycle
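
The cycle can be illustrated with a minimal sketch. This is an assumption-laden toy, not a reference implementation: the `Agent` class, the `scripted_model` stand-in for a language model, and the `lookup_order` tool are all hypothetical names invented for illustration.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Tuple

# A tool is any callable the agent can invoke; here each takes and returns a string.
Tool = Callable[[str], str]


@dataclass
class Agent:
    model: Callable[[str, List[str]], Tuple[str, str]]  # the "Brain"
    tools: Dict[str, Tool]                               # the "Hands"
    memory: List[str] = field(default_factory=list)      # kept by the orchestration layer

    def run(self, goal: str, max_steps: int = 5) -> str:
        """Orchestration layer: repeat Think, Act, Observe until done."""
        for _ in range(max_steps):
            # Think: the model picks the next action from the goal and memory.
            action, argument = self.model(goal, self.memory)
            if action == "finish":
                return argument
            # Act: call the chosen tool, if it exists.
            if action in self.tools:
                observation = self.tools[action](argument)
            else:
                observation = f"unknown tool: {action}"
            # Observe: store the result so the next Think step can use it.
            self.memory.append(f"{action}({argument}) -> {observation}")
        return "stopped: step limit reached"


def scripted_model(goal: str, memory: List[str]) -> Tuple[str, str]:
    """Hypothetical stand-in for an LM: look something up once, then finish."""
    if not memory:
        return ("lookup_order", "A-100")
    return ("finish", f"Answer based on: {memory[-1]}")


def lookup_order(order_id: str) -> str:
    """Hypothetical tool that would normally call an order API."""
    return f"order {order_id} is shipped"


agent = Agent(model=scripted_model, tools={"lookup_order": lookup_order})
result = agent.run("Where is order A-100?")
print(result)
```

The step limit in `run` is a design choice of this sketch: it keeps an agent that never reaches "finish" from looping indefinitely.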

From MLOps to 'AgentOps': The New Management Stack

Agentic AI requires an "emerging discipline" known as "AgentOps". AgentOps is designed to manage the entire lifecycle of autonomous entities. The "asset" being managed is the agent itself—its goals, its memory, its state, its "toolbox" of APIs, and its collaborative behaviors with other agents.

This new stack of tools is the "factory floor" for building and scaling the digital workforce. Its functions are radically different from MLOps and include:

Orchestration: Managing complex interactions between multiple collaborating agents. This capability is deeply informed by frameworks originating from Google and Google DeepMind, which utilize "Societies of Agents" or "Debate Architectures." These approaches leverage a team of specialized agents—like a 'Coder Agent' and a 'Tester Agent'—to collectively solve problems, yielding higher quality and more robust outcomes than a single agent operating alone.

Monitoring: Tracking agent behavior, goal-alignment, and state, not just model accuracy. This includes monitoring for "emergent behaviors".

Governance: Enforcing rules, managing agent "memory," and ensuring autonomous actions stay within pre-defined guardrails.

Beyond Traditional Agile: From 'Tasks' to 'Missions'

For two decades, Agile and DevOps have been the gold standard for software velocity. However, their "rigid ceremonies" and "human-centric planning" are not designed for managing autonomous "teammates".

This new paradigm requires a shift in the fundamental "unit of work." In Agile, the unit of work is a "user story" or "task," which is estimated in "story points," a proxy for human effort. This is obsolete when one of your "teammates" is an agent that operates at machine speed and does not "work" in story points. The new model is the "mission-based team". This is a hybrid team of human experts and AI agents, co-located to achieve a common goal. This team is not given a "backlog" of tasks; it is given a mission (e.g., "reduce customer churn by 5%," "decrease order fulfillment time by 15%").

New Governance: The 'Human-on-the-Loop' (HOTL) Model

The "Human-on-the-Loop" (HOTL) model overcomes the main disadvantage of the "Human-in-the-Loop" (HITL) model, in which a human must approve every critical action. That approval step is the enemy of Agentic AI velocity. HOTL is a governance framework where humans set the 'rules of the road' (the guardrails), monitor agent performance, and only intervene on exception. HOTL shifts the human role from an approver (a bottleneck) to an overseer (a designer). The human "AI Safety & Governance Specialist" works with the team to define the "sandbox" within which the agent is pre-approved to operate autonomously. Velocity is thus a function of how well an organization can design, implement, and trust its HOTL frameworks.
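
A minimal sketch of the HOTL idea follows, assuming the "sandbox" can be expressed as a set of pre-approved action names. The `Sandbox` and `HotlController` classes and the action names are hypothetical, chosen only to show actions inside the sandbox running without approval while everything else is escalated to a human.

```python
from dataclasses import dataclass, field
from typing import List, Set


@dataclass
class Sandbox:
    """Actions the agent is pre-approved to take autonomously (hypothetical)."""
    allowed_actions: Set[str]


@dataclass
class HotlController:
    sandbox: Sandbox
    executed: List[str] = field(default_factory=list)   # ran without human approval
    escalated: List[str] = field(default_factory=list)  # exceptions awaiting a human

    def submit(self, action: str) -> str:
        # Inside the sandbox: act immediately; the human only monitors the log.
        if action in self.sandbox.allowed_actions:
            self.executed.append(action)
            return "executed"
        # Outside the sandbox: intervene on exception.
        self.escalated.append(action)
        return "escalated"


controller = HotlController(Sandbox({"send_reminder_email", "update_crm_note"}))
requests = ["send_reminder_email", "issue_refund", "update_crm_note"]
statuses = [controller.submit(action) for action in requests]
print(statuses)  # ['executed', 'escalated', 'executed']
```

Under HITL, all three requests would wait for approval; here only the one outside the sandbox does.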

Navigating High-Velocity Risk: Ethical Guardrails for Agentic AI

For Agentic AI, safety is velocity. The only way to unlock velocity is to directly address the fear of a "rogue agent" violating data privacy, breaking laws, or being manipulated via a new attack surface. The solution is "Governance-as-Code": a framework of "robust ethical guardrails and auditable compliance frameworks". This is not a "nice-to-have" or an inhibitor. It is the central business case for the entire transformation. It is the mechanism that builds the organizational trust required to delegate autonomy. The governance rules (the ethical guardrails, the data privacy rules, the compliance frameworks, and the "agent alignment" checks) are not a document. They are code, "automated," and "embedded" directly into the AgentOps platform and the HOTL framework.

An agent cannot access PII/PHI (it is programmatically blocked from doing so).

An agent cannot take a financial action above a certain threshold without automated flagging.

An agent's goals are continuously monitored for "alignment drift" (i.e., when an autonomous system optimizes for a metric that is unintended or harmful to the overall business goal).

By automating the guardrails, the organization eliminates the need for manual, speed-killing reviews.
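
The three example rules above could be expressed as code roughly as follows. This is a sketch under stated assumptions: the field names in `SENSITIVE_FIELDS`, the `PAYMENT_FLAG_THRESHOLD` value, the `Action` type, and the simple drift heuristic are all hypothetical and would be defined by each organization's own policies.

```python
from dataclasses import dataclass
from typing import List, Optional

SENSITIVE_FIELDS = {"ssn", "date_of_birth", "diagnosis"}  # hypothetical PII/PHI fields
PAYMENT_FLAG_THRESHOLD = 1_000.00                         # hypothetical limit


@dataclass
class Action:
    kind: str                         # "read" or "payment" in this sketch
    field_name: Optional[str] = None  # set for "read" actions
    amount: float = 0.0               # set for "payment" actions


def check(action: Action) -> str:
    """Return 'block', 'flag', or 'allow' for a proposed agent action."""
    # Rule 1: reads of PII/PHI fields are blocked outright.
    if action.kind == "read" and action.field_name in SENSITIVE_FIELDS:
        return "block"
    # Rule 2: payments above the threshold are flagged automatically.
    if action.kind == "payment" and action.amount > PAYMENT_FLAG_THRESHOLD:
        return "flag"
    return "allow"


def alignment_drift(proxy_metric: List[float], business_metric: List[float]) -> bool:
    """Rule 3 (simplified): the optimized metric rises while the business goal falls."""
    return proxy_metric[-1] > proxy_metric[0] and business_metric[-1] < business_metric[0]


print(check(Action("read", field_name="ssn")))        # block
print(check(Action("payment", amount=5_000.00)))      # flag
print(check(Action("payment", amount=50.00)))         # allow
print(alignment_drift([0.2, 0.4], [0.9, 0.7]))        # True
```

Because the checks are plain functions, they can run on every proposed action and be versioned, reviewed, and audited like any other code.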

Conclusion: The Velocity Imperative

The initial wave of Generative AI was about awe. The current wave of Agentic AI is about action.

Achieving innovation velocity today means moving beyond viewing AI as a tool for content creation and viewing it as a framework for autonomous execution. By leveraging the cognitive architectures—reasoning, planning, tool use, and collaboration—enterprises can stop just talking about innovation and start engineering it at scale.

References